Qualitative Results (SpaceNet6 Dataset)

Qualitative Results (SAR2Opt Dataset)

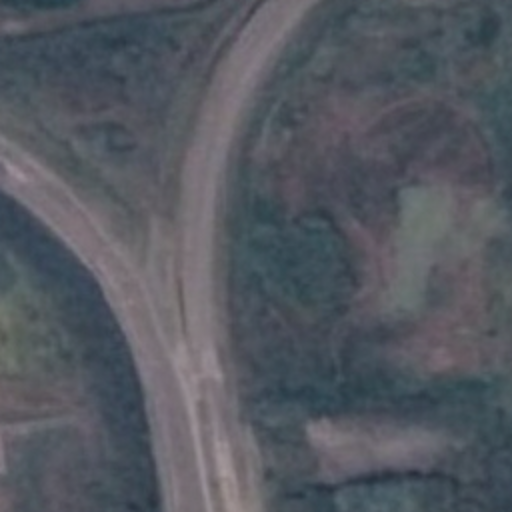

Qualitative Results (QXS-SAROPT Dataset)

Abstract

We propose Confidence Diffusion for SAR-to-EO Translation (C-DiffSET), the first framework to fine-tune a pretrained LDM for SAR-to-EO image translation (SET) tasks, effectively leveraging their learned representations to overcome the scarcity of SAR-EO pairs and local misalignment challenges.

We introduce a novel C-Diff loss that can guide our C-DiffSET to reliably predict both EO outputs and confidence maps for accurate SET.

We validate our C-DiffSET through extensive experiments on datasets with varying resolutions and ground sample distances (GSD), including QXS-SAROPT, SAR2Opt, and SpaceNet6 datasets, demonstrating the superiority of our C-DiffSET that significantly outperforms the very recent image-to-image translation methods and SET methods with large margins.

Motivation of C-DiffSET

Left. Examples of misalignments in paired SAR-EO datasets. Local spatial

misalignments caused by sensor differences or acquisition conditions. Temporal misalignments

(discrepancies) where objects (e.g., ships) appear or disappear between SAR and EO images

due to their different acquisition times.

Right. Results of applying the VAE encoder and decoder from LDM to EO and SAR images.

The first row shows input images, including EO and SAR images with different levels of

speckle noise. The second row presents the corresponding VAE reconstructions, illustrating

that both EO and SAR images are accurately reconstructed despite noise variations.

Overview of C-DiffSET

Left. Training strategy for the diffusion process.

Right. Inference stage for EO image prediction.

Quantitative Evaluation

Quantitative comparison of image-to-image translation methods and SET methods on SAR2Opt and SpaceNet6 datasets. Our C-DiffSET to attain the highest PSNR, SSIM, and SCC values, along with the lowest LPIPS and FID scores.

Left. Quantitative comparison of image-to-image translation methods and SET methods

on the QXS-SAROPT dataset.

Right. Ablation study on the SAR2Opt dataset evaluating the impact of pretrained LDM

and C-Diff loss.

Qualitative results of image-to-image translation methods and SET methods. Our C-DiffSET achieve superior structural accuracy and visual fidelity compared to very recent methods.

Analysis of Confidence Output

Confidence maps generated on the training dataset. Using this confidence map, C-Diff loss enables the U-Net \( \psi \) to down-weight uncertain regions where objects are temporally misaligned across modalities, thereby reducing artifacts and ensuring coherent EO outputs.